Visual checks for the output of tuneLearnFast()

Provides some visual checks to verify whether the Brent optimizer used by tuneLearnFast() worked correctly.

# S3 method for learnFast
check(obj, sel = NULL, ...)

Arguments

| Argument | Description |
|---|---|
| obj | the output of a call to tuneLearnFast(). |
| sel | integer vector determining which of the plots will be produced. For instance if |
| ... | currently not used, here only for compatibility reasons. |

Value

It produces several plots.

Details

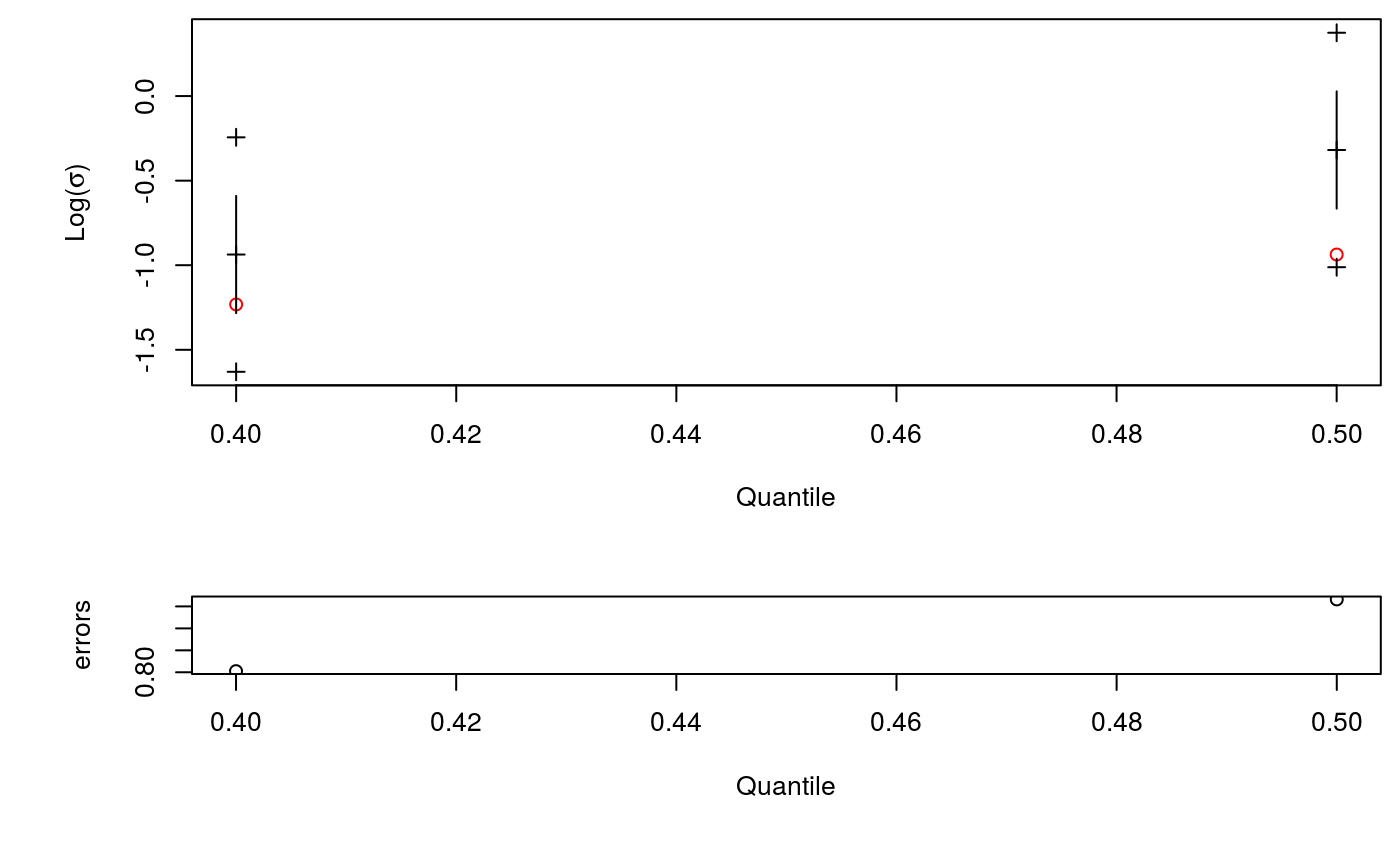

The top plot in the first page shows the bracket used to estimate log(sigma) for each quantile.

The brackets are delimited by the crosses and the red dots are the estimates. If a dot falls very close to one of the crosses,

that might indicate problems. The bottom plot shows, for each quantile, the value of parameter err used. Sometimes the algorithm

needs to increase err above its user-defined value to achieve convergence. Subsequent plots show, for each quantile, the value

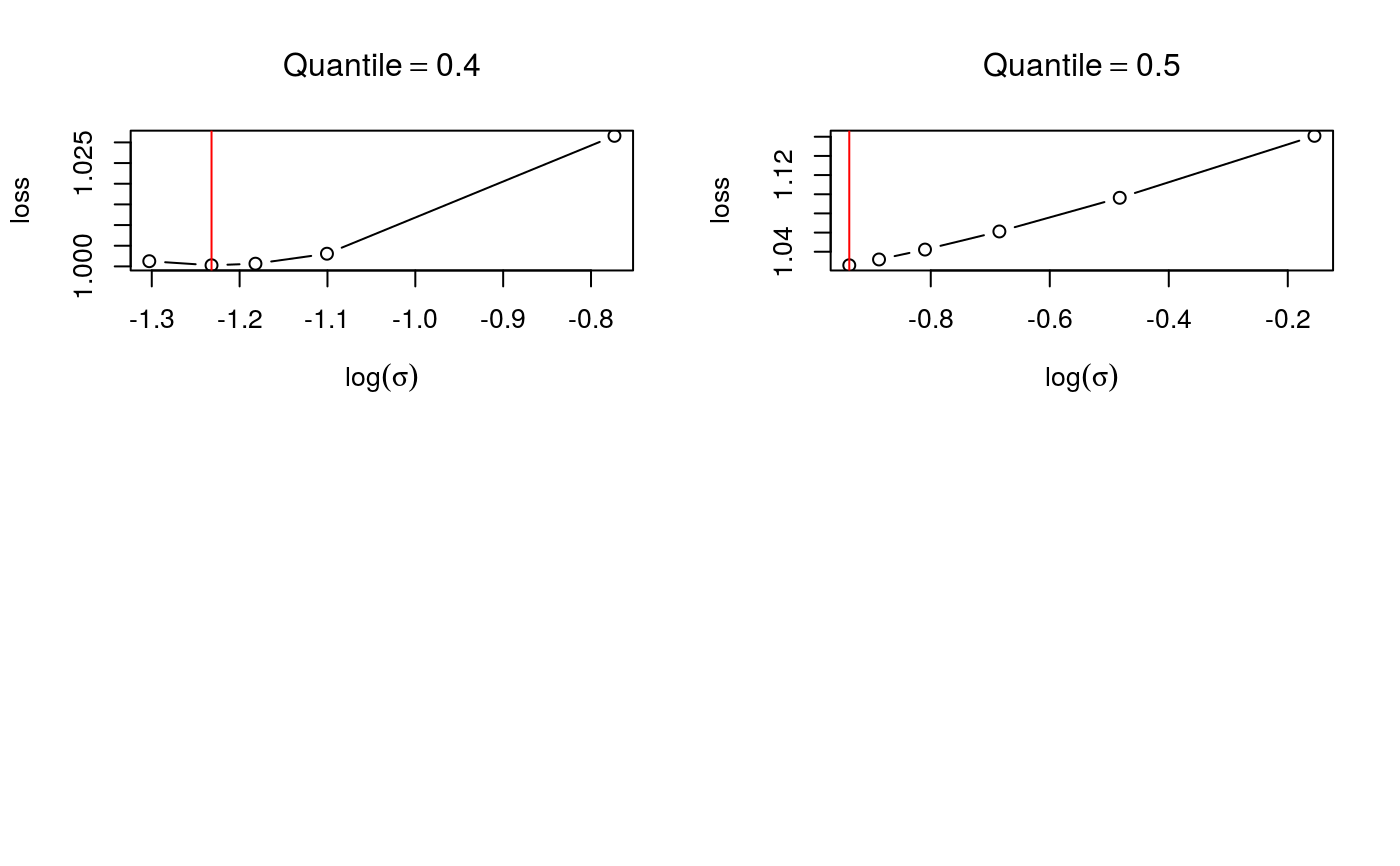

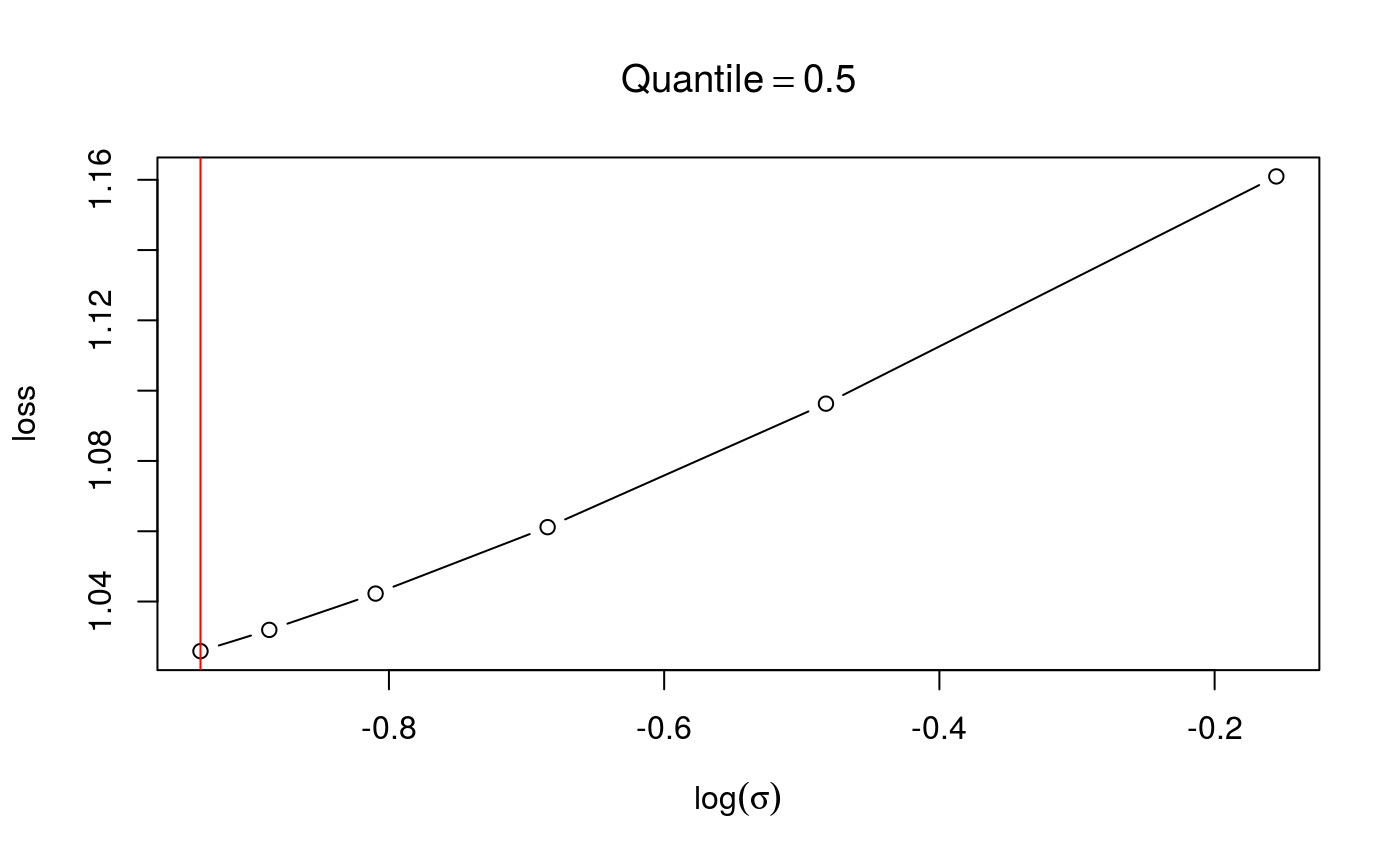

of the loss function corresponding to each value of log(sigma) explored by Brent algorithm.
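To see why an estimate sitting next to a bracket end is a warning sign, the following base R sketch may help. It does not use qgam's internals: `toy_loss` and `near_edge` are hypothetical names introduced here, the 5% threshold is an arbitrary assumption, and `stats::optimize()` (a Brent-type one-dimensional minimizer) stands in for the optimizer used by tuneLearnFast().

```r
# Toy loss with its minimum at log(sigma) = 1.5 (purely illustrative,
# not the calibration loss used by qgam).
toy_loss <- function(lsig) (lsig - 1.5)^2

# Hypothetical helper: TRUE when the estimate lies within `frac` of the
# bracket width from either end of the bracket.
near_edge <- function(est, bracket, frac = 0.05) {
  width <- diff(range(bracket))
  min(abs(est - bracket)) < frac * width
}

# Bracket that comfortably contains the true minimum.
good <- optimize(toy_loss, interval = c(0, 3))

# Bracket that excludes the true minimum: the search is pushed
# against the upper end of the bracket.
bad <- optimize(toy_loss, interval = c(-2, 1))

near_edge(good$minimum, c(0, 3))   # FALSE: estimate is well inside the bracket
near_edge(bad$minimum, c(-2, 1))   # TRUE: estimate hugs the bracket end
```

In the second case the reported minimum is only the best point inside the bracket, not the minimum of the loss, which is the situation the first plot is meant to reveal.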

References

Fasiolo, M., Goude, Y., Nedellec, R. and Wood, S. N. (2017). Fast calibrated additive quantile regression. Available at https://arxiv.org/abs/1707.03307.

Examples

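library(qgam)
# Assumed setup, not shown in the original: simulate data with mgcv::gamSim();
# the sample size is an assumption.
dat <- gamSim(1, n = 200)
#> Gu & Wahba 4 term additive model
b <- tuneLearnFast(y ~ s(x0)+s(x1)+s(x2)+s(x3), data = dat, qu = c(0.4, 0.5),
                   control = list("tol" = 0.05)) # <- sloppy tolerance to speed-up calibration
#> Estimating learning rate. Each dot corresponds to a loss evaluation.
#> qu = 0.5......done
#> qu = 0.4.....done
check(b)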